Did you know that the global artificial intelligence (AI) market is projected to reach a value of $1,811.75 billion by 2030? As AI continues to transform industries and drive innovation, the demand for skilled AI engineers is skyrocketing. To excel in this rapidly evolving field, AI engineers need to know the right tools and technologies.

Dive into the essential tools and technologies that every AI engineer should be familiar with. From machine learning algorithms and deep learning frameworks to data preprocessing techniques and natural language processing libraries, explore the key components that enable AI engineers to develop cutting-edge AI models and applications.

Key Takeaways:

- AI engineers need to stay up-to-date with the latest tools and technologies in order to meet the growing demand in the AI industry.

- Machine learning algorithms form the foundation of AI systems and enable machines to learn from data.

- Data preprocessing techniques are essential for cleaning and enhancing the quality of data used in AI models.

- Deep learning frameworks provide the infrastructure and tools needed to build and train deep neural networks.

- AI engineers should be familiar with model training methods such as supervised learning, unsupervised learning, and reinforcement learning.

Machine Learning Algorithms

Machine learning algorithms play a crucial role in the development of AI systems. These algorithms enable machines to learn from data, make predictions, and make informed decisions. As an AI engineer, it is essential to have a deep understanding of various machine learning algorithms to design effective AI models. Let’s explore some of the key machine learning algorithms:

1. Decision Trees

Decision trees are versatile algorithms that use a hierarchical structure to make decisions based on input features. They are widely used for classification and regression tasks in AI applications. Decision trees provide transparency and interpretability, making them valuable in scenarios where explainability is crucial.
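To make the idea of a feature-based decision concrete, here is a minimal sketch of how a single split in a classification tree could be chosen, assuming Gini impurity as the split criterion (one common choice, not the only one). The function names and the toy data are hypothetical and use only the Python standard library; a production project would normally rely on a library such as scikit-learn instead.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted_impurity) for the best 'value <= threshold' split."""
    best = (None, float("inf"))
    # Skip the largest value: splitting there would leave the right side empty.
    for threshold in sorted(set(values))[:-1]:
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical toy data: hours studied versus exam outcome.
hours = [1, 2, 3, 6, 7, 8]
passed = ["no", "no", "no", "yes", "yes", "yes"]
threshold, impurity = best_split(hours, passed)
print(f"split at hours <= {threshold}, weighted impurity {impurity}")
```

A full tree repeats this search recursively on each side of the split until a stopping rule is met, which is also why the resulting rules are easy to read back and explain.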

2. Random Forests

Random forests are an ensemble learning technique that combines multiple decision trees to improve performance and reduce overfitting. Each tree in the forest independently makes predictions, and the final prediction is obtained by voting or averaging the individual predictions. Random forests are known for their robustness and ability to handle high-dimensional datasets.
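The voting and averaging step described above can be sketched as follows. This is only the aggregation part: the three "trees" are hypothetical hand-written threshold rules, and the sketch leaves out how each tree in a real random forest is trained on a resampled subset of the data and features.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine class predictions from several models by majority vote."""
    return Counter(predictions).most_common(1)[0][0]


def average(predictions):
    """Combine numeric predictions (regression) by averaging them."""
    return sum(predictions) / len(predictions)


# Three hypothetical "trees", each reduced to a single threshold rule.
trees = [
    lambda msg: "spam" if msg["links"] > 3 else "ham",
    lambda msg: "spam" if msg["caps_ratio"] > 0.5 else "ham",
    lambda msg: "spam" if msg["length"] < 20 else "ham",
]

message = {"links": 5, "caps_ratio": 0.7, "length": 120}
votes = [tree(message) for tree in trees]
label = majority_vote(votes)
print(votes, "->", label)
```

Because the final label depends on several independent votes, one tree that has overfit to noise is less likely to decide the outcome on its own.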

3. Support Vector Machines (SVM)

Support vector machines are powerful algorithms used for classification and regression tasks. SVM finds the best hyperplane that separates different classes in the dataset, maximizing the margin between classes. SVM is effective in handling both linearly separable and non-linearly separable data through the use of kernel functions.

4. Neural Networks

Neural networks are the foundation of deep learning and are loosely inspired by the structure of the human brain. They consist of interconnected layers of artificial neurons that process and transmit information. With their ability to learn complex patterns, neural networks have achieved remarkable success in various AI applications, such as image recognition, natural language processing, and speech recognition.

To gain a comprehensive understanding of these and other machine learning algorithms, AI engineers should delve into the strengths, weaknesses, and best use cases for each algorithm. This knowledge empowers AI engineers to select the most suitable algorithm for a specific AI task and optimize model performance.

| Algorithm | Use Case | Advantages | Disadvantages |
|---|---|---|---|
| Decision Trees | Classification, regression | Interpretability, handling nonlinear relationships | Prone to overfitting, unstable with small changes in data |
| Random Forests | Classification, regression | Reduces overfitting, handles high-dimensional data | Difficult to interpret, requires more computational resources |
| Support Vector Machines (SVM) | Classification, regression | Effective for high-dimensional data, handles both linear and nonlinear relationships | Less suitable for large datasets, sensitive to parameter choices |
| Neural Networks | Image recognition, natural language processing, speech recognition | Ability to learn complex patterns, adaptability to various tasks | Require large amounts of data, computationally intensive |

Data Preprocessing Techniques

Before feeding data into machine learning algorithms, it is essential to preprocess and clean the data to improve its quality and remove any noise or inconsistencies. Data preprocessing techniques are crucial in ensuring that the data used for training AI models is accurate and reliable.

AI engineers should be familiar with various data preprocessing techniques to handle different types of data challenges. Some common techniques include:

- Data Cleaning: Removing or fixing any errors, outliers, or duplicate data points that may interfere with the accuracy of the AI model.

- Data Normalization: Rescaling numerical data to a fixed range, such as 0 to 1, to ensure fair comparison between features and prevent any feature from dominating the model.

- Feature Scaling: Transforming numerical features to a comparable scale or distribution, for example by standardizing them to zero mean and unit variance, so that features with larger magnitudes do not dominate.

- Handling Missing Values: Dealing with missing or incomplete data points, either by imputing values or using advanced techniques to handle missing data.

By applying these data preprocessing techniques, AI engineers can ensure the integrity and reliability of their training data, leading to more accurate and robust AI models.
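As a rough illustration of the techniques listed above, the sketch below imputes a missing value with the column mean, rescales the column to the range 0 to 1, and standardizes it. The function names and the sample column are hypothetical, and mean imputation is just one simple strategy among many; libraries such as pandas and scikit-learn offer more complete versions of each step.

```python
from statistics import mean, pstdev


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift and scale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical "age" column with one missing entry.
ages = [20, None, 40, 60, 20]
filled = impute_mean(ages)
scaled = min_max_scale(filled)
print(filled)
print(scaled)
```

In a real pipeline the fill value, minimum, maximum, mean, and standard deviation would be computed on the training split only and then reused on validation and test data, so that no information leaks from the held-out sets.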

“Data preprocessing is a critical step in AI development, allowing you to transform raw data into a clean and usable format. It ensures that your AI models can make accurate predictions and decisions based on reliable data.”

Understanding and implementing these techniques can significantly improve the performance and effectiveness of AI models in various domains, from healthcare to finance and beyond.

Deep Learning Frameworks

Deep learning frameworks play a crucial role in AI development, providing the necessary infrastructure and tools for building and training deep neural networks. These networks are a key component of many AI applications, enabling advanced capabilities such as image recognition, natural language processing, and voice recognition.

Some popular deep learning frameworks used by AI engineers include:

- PyTorch

- TensorFlow

- Keras

These frameworks offer a wide range of functionalities and APIs that simplify the process of designing and implementing deep learning models. With their intuitive interfaces and extensive documentation, AI engineers can quickly prototype and deploy deep neural networks with ease.

PyTorch, developed by Facebook’s AI Research lab, is known for its dynamic computational graph, making it highly suitable for research and experimentation. TensorFlow, created by Google, provides a comprehensive ecosystem for AI development, with support for distributed training and deployment on various platforms. Keras, on the other hand, is a high-level API that most commonly runs on top of TensorFlow, offering a user-friendly interface for building neural networks.

Having hands-on experience with these deep learning frameworks empowers AI engineers to leverage the power of deep learning in their projects. Furthermore, these frameworks have active communities and vibrant ecosystems, making it easy to find support, learn new techniques, and stay up-to-date with the latest advancements in AI deep learning.

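| Name | Features | Advantages |
|---|---|---|
| PyTorch | Dynamic computational graph | Well suited to research and experimentation |
| TensorFlow | Comprehensive ecosystem, distributed training | Deployment on various platforms |
| Keras | High-level API, typically run on top of TensorFlow | User-friendly interface for building neural networks |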

Model Training Methods

Model training is a crucial step in developing AI systems. It involves teaching an AI model to make accurate predictions or decisions based on data. AI engineers employ various model training methods, each with its own unique approach and suitability for different types of AI tasks.

In the realm of AI model training, three commonly used methods are supervised learning, unsupervised learning, and reinforcement learning:

- Supervised Learning: This method involves training an AI model using labeled data, where the input data and corresponding output labels are provided. The model learns to map input data to the correct output, enabling it to make accurate predictions on new, unseen data.

- Unsupervised Learning: Unlike supervised learning, unsupervised learning works with unlabeled data. The AI model learns patterns and structures within the data without any specific output labels. This method is suitable for tasks like clustering, dimensionality reduction, and anomaly detection.

- Reinforcement Learning: This method employs the concept of reward and punishment to train an AI model. The model interacts with an environment and learns to make decisions that maximize a reward signal. Reinforcement learning is commonly used for tasks like game-playing, robotics, and autonomous systems.

Each model training method serves a distinct purpose and has its own advantages and limitations. AI engineers must carefully select and implement the appropriate method based on the requirements of the AI task at hand.
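To show what "learning to map input data to the correct output" looks like in the supervised case, here is a minimal sketch that trains a perceptron, one of the simplest supervised models, on a tiny labeled dataset. The dataset (the logical AND function), the function names, and the hyperparameters are illustrative assumptions rather than a recommended setup.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Fit weights and a bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if activation > 0 else 0
            error = y - predicted  # 0 when correct, +1 or -1 when wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Classify one input with the learned weights."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Labeled training data: inputs paired with the desired output (logical AND).
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(weights, bias, predictions)
```

The labels drive every update here: the model changes only when its prediction disagrees with the provided answer. Unsupervised and reinforcement learning replace that signal with structure in the data or with a reward, respectively.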

Example: Comparison of Model Training Methods for Sentiment Analysis

To further illustrate the differences among model training methods, let’s consider a common AI task: sentiment analysis. The goal of sentiment analysis is to determine the sentiment (positive, negative, or neutral) expressed in a given text.

| Model Training Method | Advantages | Limitations |
|---|---|---|
| Supervised Learning | Utilizes labeled data for accurate sentiment classification. | Depends on the availability of labeled data, which can be time-consuming and expensive to acquire. |
| Unsupervised Learning | Does not require labeled data, allowing for exploration of latent sentiment patterns. | May produce ambiguous results due to the absence of ground truth labels. |
| Reinforcement Learning | Allows the model to learn from trial and error, improving accuracy over time. | Requires defining an appropriate reward system and conducting iterative training. |

As shown in the table above, supervised learning requires labeled data, making it suitable when sentiment labels can be readily obtained. Unsupervised learning, on the other hand, provides flexibility in analyzing sentiment without relying on labeled data, but may result in less accurate sentiment classification. Reinforcement learning allows the model to learn from interactions and refine its sentiment analysis abilities over time, but it requires careful reward system design and iterative training.

When choosing model training methods for sentiment analysis or any other AI task, it is essential to consider the availability of labeled data, the desired outcome, and the specific requirements of the project. By selecting the most appropriate method, AI engineers can enhance the accuracy and reliability of their AI models.

Natural Language Processing Libraries

In the field of artificial intelligence (AI), natural language processing (NLP) plays a vital role in enabling computers to understand and interact with human language. NLP libraries provide a comprehensive range of tools and algorithms that help AI engineers process text, analyze sentiment, and understand human language patterns.

Three popular NLP libraries that AI engineers should be familiar with are:

- NLTK (Natural Language Toolkit): NLTK is a robust Python library widely used for NLP tasks. It offers numerous tools for tokenization, stemming, lemmatization, part-of-speech tagging, sentiment analysis, and more.

- SpaCy: SpaCy is a powerful and efficient NLP library that offers advanced features such as named entity recognition, dependency parsing, and word vectors. It is designed to deliver fast and accurate results, making it a preferred choice for many AI professionals.

- Gensim: Gensim is a popular open-source library that specializes in topic modeling, document similarity analysis, and word embedding techniques. It provides an intuitive interface for working with large text datasets and building scalable NLP models.

The use of these NLP libraries empowers AI engineers to extract valuable insights from text data and build intelligent applications that can understand, interpret, and generate human language.
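For a sense of what tokenization and document similarity involve under the hood, the following standard-library sketch builds bag-of-words counts and compares documents with cosine similarity. It is a deliberately simplified stand-in, not how NLTK, SpaCy, or Gensim implement these features; the regular expression tokenizer and the sample sentences are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


doc1 = Counter(tokenize("The cat sat on the mat."))
doc2 = Counter(tokenize("The cat lay on the rug."))
doc3 = Counter(tokenize("Stock prices fell sharply today."))

print(cosine_similarity(doc1, doc2))  # shares several words with doc1
print(cosine_similarity(doc1, doc3))  # shares no words with doc1
```

Word counts ignore word order and meaning, which is the gap that features such as lemmatization, word vectors, and topic models in the libraries above are meant to close.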

“NLP libraries like NLTK, SpaCy, and Gensim play a crucial role in processing text data and understanding the intricate nuances of human language. These libraries equip AI engineers with the necessary tools to unlock the true potential of AI-driven language processing applications.”

To get a better understanding of the capabilities and features offered by these NLP libraries, refer to the comparison table below:

| Library | Main Features | Programming Language | License |
|---|---|---|---|
| NLTK | Tokenization, stemming, lemmatization, POS tagging, sentiment analysis, named entity recognition | Python | Apache 2.0 |
| SpaCy | Dependency parsing, named entity recognition, part-of-speech tagging, word vectors | Python | MIT |
| Gensim | Topic modeling, document similarity analysis, word2vec, fastText | Python | LGPL 2.1 |

By leveraging the capabilities of these natural language processing libraries, AI engineers can develop sophisticated language models, sentiment analysis systems, chatbots, language translators, and more, contributing to the advancement of AI technologies in various industries.

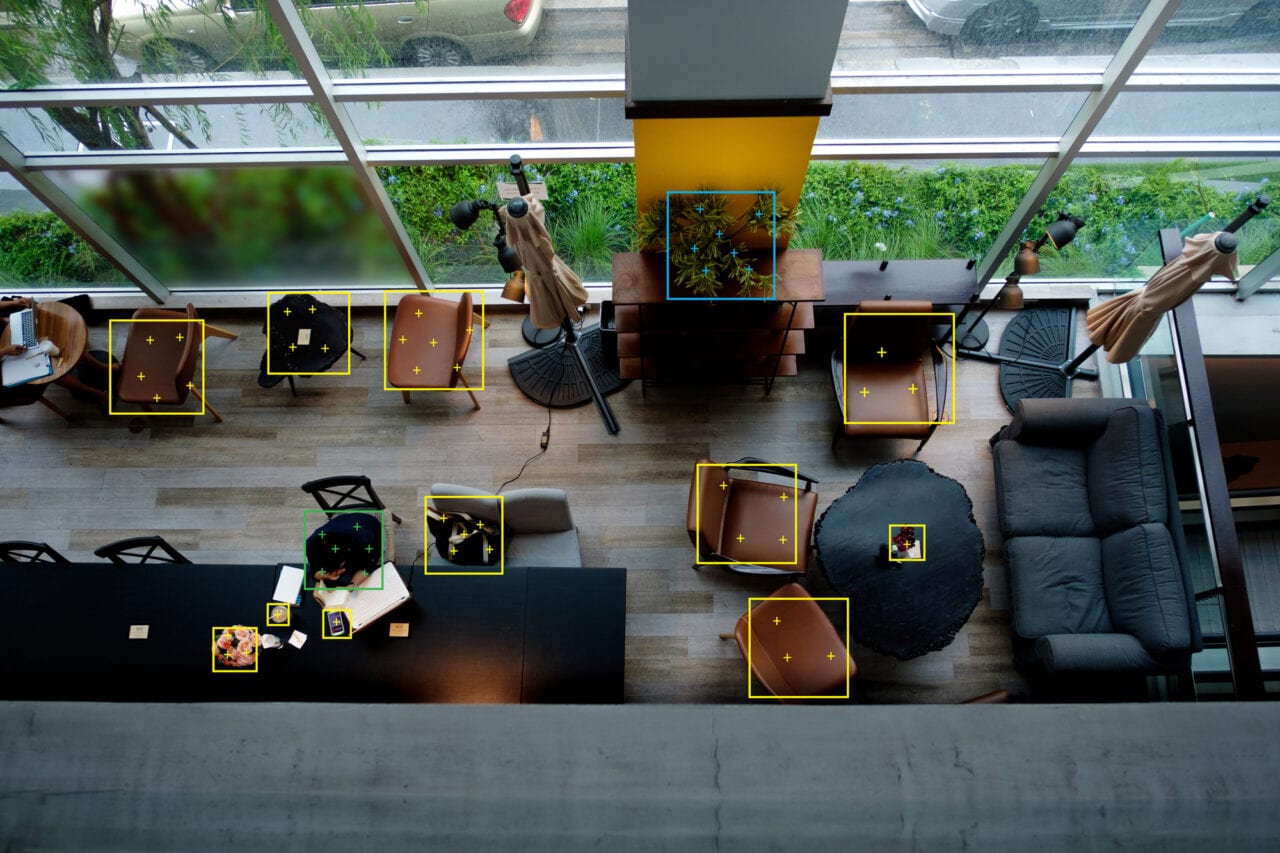

Computer Vision Software

Computer vision is a crucial aspect of AI, enabling machines to analyze and understand visual data. As an AI engineer, it is essential to be proficient in computer vision software to develop advanced AI applications. There are various computer vision software options available, each with its own set of features and capabilities.

OpenCV

OpenCV is a popular open-source computer vision library widely used by AI engineers. It offers a comprehensive set of algorithms and functions for image and video analysis, object detection and tracking, feature extraction, and more. OpenCV supports multiple programming languages, including Python, C++, and Java, making it accessible to developers across different platforms.

Dlib

Dlib is another powerful computer vision library commonly used in AI development. It provides a wide range of functions for facial recognition, image classification, object detection, and shape prediction. Dlib is known for its efficiency and robustness, making it a preferred choice for tasks such as face detection in real-time applications.

TensorFlow.js

TensorFlow.js is a JavaScript library developed by Google that brings the power of TensorFlow, an AI framework, to the web browser. It allows AI engineers to build and run computer vision models directly in the browser, eliminating the need for server-side processing. With TensorFlow.js, you can perform tasks like image classification, object detection, and style transfer seamlessly in web applications.

Incorporating computer vision software into your AI projects provides opportunities to develop innovative solutions in areas like autonomous driving, medical imaging, and surveillance systems. By leveraging the capabilities offered by OpenCV, Dlib, TensorFlow.js, and other computer vision software, you can unlock the full potential of AI computer vision and create impactful applications.

| Computer Vision Software | Main Functions | Languages/Frameworks Supported |
|---|---|---|
| OpenCV | Image and video analysis, object detection and tracking, feature extraction | Python, C++, Java |
| Dlib | Facial recognition, image classification, object detection, shape prediction | C++, Python |
| TensorFlow.js | Browser-based AI, image classification, object detection, style transfer | JavaScript |

AI Model Evaluation

Evaluating the performance of AI models is crucial to ensure their accuracy and effectiveness. As an AI engineer, you need to be familiar with various model evaluation techniques to assess the performance of your AI models. These evaluation techniques provide insights into how well your models are performing and help identify areas for improvement and optimization.

When it comes to AI model evaluation, there are several key metrics and methodologies that you should be acquainted with:

- Accuracy: This metric measures how well your model predicts the correct output. It is calculated by dividing the number of correctly predicted instances by the total number of instances.

- Precision: Precision quantifies the proportion of true positive predictions out of all positive predictions. It indicates how well your model avoids false positives.

- Recall: Recall, also known as sensitivity or true positive rate, measures the proportion of true positive predictions out of all actual positive instances. It assesses how well your model avoids false negatives.

- F1 score: The F1 score is the harmonic mean of precision and recall, combining them into a single metric. It is most useful when classes are imbalanced and accuracy alone would be misleading.

- ROC curves: ROC curves, short for receiver operating characteristic curves, are used to evaluate the performance of binary classification models. They visualize the trade-off between the true positive rate and the false positive rate at various classification thresholds.

By leveraging these evaluation techniques, you can gain a deeper understanding of your AI models’ strengths and weaknesses. This knowledge is invaluable for making informed decisions on model optimization, feature engineering, and hyperparameter tuning.
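The first four metrics above follow directly from the counts of true and false positives and negatives. The sketch below computes them for a binary classifier using only the standard library; the function names and the example labels are hypothetical, and libraries such as scikit-learn provide equivalent, more thoroughly tested functions.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground truth and predictions: 3 TP, 1 FN, 1 FP, 3 TN.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

The guards against division by zero matter in practice: a model that never predicts the positive class has no defined precision, and this sketch reports 0.0 in that case by convention.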

Neural Network Architectures

Neural networks play a pivotal role in AI models, particularly in deep learning. As an AI engineer, it is crucial to have a deep understanding of different neural network architectures. These architectures enable you to design powerful and effective AI systems that can tackle complex tasks with remarkable accuracy. Let’s explore three important neural network architectures:

1. Convolutional Neural Networks (CNNs)

CNNs are widely used for image and video processing tasks. They excel at detecting patterns and extracting features from visual data. By applying convolutional layers, pooling layers, and fully connected layers, CNNs can recognize objects, classify images, and perform tasks like image segmentation and style transfer.
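As a small illustration of what a convolutional layer and a pooling layer compute, the sketch below slides a 2x2 kernel over a tiny image and then applies 2x2 max pooling. The image, the hand-picked edge-detecting kernel, and the function names are assumptions for illustration; in a real CNN the kernel values are learned during training and the work is done by a framework such as PyTorch or TensorFlow.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the operation deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2x2(fm):
    """Downsample a feature map by taking the maximum of each 2x2 block."""
    return [
        [
            max(fm[i][j], fm[i][j + 1], fm[i + 1][j], fm[i + 1][j + 1])
            for j in range(0, len(fm[0]) - 1, 2)
        ]
        for i in range(0, len(fm) - 1, 2)
    ]


# A 4x4 "image" that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
pooled = max_pool2x2(feature_map)
print(feature_map)
print(pooled)
```

The feature map is non-zero only in the column where the edge sits, and pooling keeps that strong response while shrinking the map.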

2. Recurrent Neural Networks (RNNs)

RNNs are designed to process sequential data, making them ideal for natural language processing and time series analysis. Unlike feedforward neural networks, RNNs have feedback connections, allowing them to handle variable-length input and access previous information. RNNs are capable of tasks such as machine translation, sentiment analysis, and speech recognition.
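The feedback connection can be illustrated with a single recurrent unit whose new hidden state depends on both the current input and the previous hidden state. This is a minimal sketch of a vanilla RNN cell with scalar, hand-chosen weights (all hypothetical); real networks use weight matrices learned by backpropagation through time.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One step of a single-unit vanilla RNN: h_t = tanh(w_xh * x_t + w_hh * h_prev + b)."""
    return math.tanh(w_xh * x + w_hh * h_prev + b)


def run_rnn(sequence, w_xh=0.5, w_hh=0.8, b=0.0):
    """Process a sequence of any length and return the hidden state after each step."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states


short_states = run_rnn([1.0, 0.0])
long_states = run_rnn([1.0, 0.0, 0.0, 0.0])
print(short_states)
print(long_states)
```

The same function handles sequences of different lengths, and the first input still influences the final state after several zero inputs, although its effect fades with each step. That fading is the motivation for gated variants such as LSTM and GRU cells.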

3. Generative Adversarial Networks (GANs)

GANs consist of two neural networks: a generator and a discriminator. The generator generates synthetic data that resembles real data, while the discriminator tries to distinguish between real and fake data. Through their adversarial training process, GANs can produce highly realistic and creative outputs, such as realistic images, music, and even text.

Understanding different neural network architectures is integral to developing innovative AI systems across various domains. By leveraging the unique capabilities of CNNs, RNNs, and GANs, you can address complex AI challenges and push the boundaries of what is possible.

Now, let’s take a closer look at the characteristics and applications of each neural network architecture:

| Neural Network Architecture | Characteristics | Applications |
|---|---|---|
| Convolutional Neural Networks (CNNs) | Convolutional layers for feature extraction; pooling layers for downsampling; fully connected layers for classification | Image classification; object detection; image segmentation; style transfer |
| Recurrent Neural Networks (RNNs) | Feedback connections for sequential data processing; Long Short-Term Memory (LSTM) cells for capturing long-term dependencies; Gated Recurrent Units (GRUs) for efficient memory | Natural language processing; machine translation; sentiment analysis; speech recognition |
| Generative Adversarial Networks (GANs) | Generator network for synthesizing data; discriminator network for distinguishing real and fake data; adversarial training process for improving the generator | Image synthesis; music generation; text generation; data augmentation |

By mastering these neural network architectures, you will have the tools to create AI models that excel in tasks specific to their respective domains. Empowered by the capabilities of CNNs, RNNs, and GANs, you can unleash your creativity and drive innovation in the field of artificial intelligence.

Programming Languages for AI

As an AI engineer, it is crucial to have a strong command of programming languages commonly used in AI development. These languages provide the foundation for creating efficient and scalable AI code. While there are several programming languages suitable for AI, Python stands out as the most popular choice among AI engineers.

Python’s simplicity and readability make it an excellent language for beginners, while its vast array of libraries and frameworks, such as NumPy, TensorFlow, and PyTorch, enable AI engineers to leverage powerful AI functionalities. Additionally, Python’s strong community support ensures access to extensive documentation, tutorials, and resources.

While Python takes the lead, other programming languages also find their place in AI development. R, known for its statistical computing capabilities, is widely used in data analysis and machine learning tasks. Java, as a general-purpose programming language, offers robustness and scalability for AI applications. C++ provides efficient performance and is often used in computationally intensive AI tasks.

By mastering these programming languages, AI engineers can unlock the full potential of AI development and effectively implement complex algorithms, manipulate data, and build scalable AI models.

| Programming Language | Frameworks & Libraries |
|---|---|
| Python | PyTorch, NumPy, TensorFlow, scikit-learn, Keras |
| C++ | TensorFlow, Caffe, OpenCV |
| Java | Deeplearning4j, Weka |
| R | Caret, TensorFlow, Keras |
| Julia | Flux.jl, TensorFlow.jl |
| MATLAB | MATLAB Deep Learning Toolbox |
| JavaScript | TensorFlow.js |
| Scala | Spark MLlib |

Main Languages by Use:

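| Programming Language | Key Features and Benefits |
|---|---|
| Python | Simple, readable syntax; vast array of libraries and frameworks such as NumPy, TensorFlow, and PyTorch; strong community support |
| R | Statistical computing capabilities; widely used in data analysis and machine learning tasks |
| Java | General-purpose language offering robustness and scalability for AI applications |
| C++ | Efficient performance; often used in computationally intensive AI tasks |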

Development Tools and Environments

To streamline your AI development process, you need access to a variety of development tools and environments. These tools provide an integrated and efficient workflow, enabling you to code, debug, and test your AI models seamlessly.

Here are some essential development tools that every AI engineer should be familiar with:

- Jupyter Notebook: Jupyter Notebook is a popular open-source web application that allows you to create and share interactive code documents. It supports multiple programming languages, including Python, R, and Julia, and provides an interactive environment for experimenting with AI models.

- PyCharm: PyCharm is a powerful IDE specifically designed for Python development. It offers intelligent code completion, debugging tools, and integration with version control systems. With PyCharm, you can write clean and efficient AI code with ease.

- Visual Studio Code: Visual Studio Code is a lightweight and versatile code editor that supports a wide range of programming languages. It comes with built-in debugging capabilities and an extensive library of extensions that enhance AI development workflows.

Version control systems play a crucial role in managing collaborative AI projects. Git, a widely-used version control system, allows multiple developers to work on the same codebase simultaneously. Platforms like GitHub provide a centralized hub for hosting and sharing code, facilitating seamless collaboration and version management.

By leveraging the power of these development tools and environments, AI engineers can accelerate their AI development process and deliver high-quality AI solutions.

| Development Tool | Description |
|---|---|
| Jupyter Notebook | An open-source web application for creating and sharing interactive code documents. |
| PyCharm | A powerful IDE specifically designed for Python development with intelligent code completion and debugging tools. |
| Visual Studio Code | A lightweight code editor with built-in debugging capabilities and an extensive library of extensions. |
| Git | A version control system that enables collaborative code development and management. |
| GitHub | A platform for hosting and sharing code, facilitating seamless collaboration and version management. |

Hardware Acceleration

AI models often require significant computational power to train and run efficiently. As an AI engineer, it is crucial to be familiar with hardware acceleration technologies that can significantly speed up AI computations. Two popular options for hardware acceleration in AI are NVIDIA GPUs and cloud-based GPU instances.

NVIDIA GPUs:

When it comes to hardware acceleration for AI, NVIDIA GPUs are widely recognized for their exceptional performance and scalability. These powerful GPUs are specifically designed to handle the demanding computational requirements of AI workloads, providing significant speed boosts to model training and inference. By leveraging the parallel processing capabilities of NVIDIA GPUs, AI engineers can reduce training times and iterate on their models more quickly.

Cloud-based GPU Instances:

Cloud-based GPU instances offer a flexible and scalable solution for hardware acceleration in AI development. Cloud companies like Microsoft Azure, Amazon Web Services (AWS), and Google Cloud Platform (GCP) offer GPU instances that can be easily provisioned on an as-needed basis. This allows AI engineers to access high-performance GPUs without the upfront costs and infrastructure management associated with dedicated hardware. Cloud-based GPUs provide the necessary computational power to train and deploy AI models efficiently, enabling seamless experimentation and scalability.

By utilizing hardware acceleration technologies like NVIDIA GPUs or cloud-based GPU instances, AI engineers can significantly enhance the speed and efficiency of AI computations. This empowers them to iterate quickly, experiment with different models, and deliver AI solutions with optimal performance.

| Hardware Acceleration Technology | Key Features |
|---|---|
| NVIDIA GPUs | Exceptional performance and scalability; parallel processing capabilities; reduced training times |
| Cloud-based GPU Instances | Flexible and scalable solution; on-demand provisioning; no upfront costs; accessible high-performance GPUs |

AI Frameworks and Libraries

To accelerate your AI development process and harness the power of cutting-edge AI algorithms, leveraging pre-built AI frameworks and libraries is essential. These frameworks and libraries provide a wide range of functions and tools for building, training, and deploying AI models. By utilizing these resources, you can streamline your development workflow and unlock the full potential of AI technology.

Some of the most popular AI frameworks and libraries used by AI engineers include:

- H2O.ai

- TensorFlow

- PyTorch

- Keras

- Caffe

- MXNet

These frameworks and libraries offer comprehensive support and extensive documentation, making it easier for AI engineers to implement complex AI algorithms and models. Whether you’re working on deep learning, computer vision, natural language processing, or any other AI domain, these frameworks and libraries provide the necessary tools to build robust and high-performance AI solutions.

Comparison of AI Frameworks and Libraries

| Framework/Library | Key Features | Supported Languages | Community Support |
|---|---|---|---|
| H2O.ai | AutoML capabilities, distributed computing, model interpretability | R, Python | Active community support |
| TensorFlow | Flexible and scalable deep learning, extensive ecosystem | Python, C++, Java, Go | Largest AI community support |
| PyTorch | Dynamic computational graphs, easy debugging and prototyping | Python | Growing community support |
| Keras | High-level API, easy model creation and training | Python | Wide community support |
| Caffe | Efficient deep learning, model zoo with pre-trained models | C++, Python | Active community support |
| MXNet | Scalable and efficient deep learning, flexible programming interface | Python, C++, R, Julia, Scala, Perl | Active community support |

These frameworks and libraries serve as powerful tools for AI engineers, enabling them to bring their AI projects to life quickly and efficiently. By incorporating these resources into your workflow, you can accelerate AI development and stay at the forefront of AI innovation.

AI Development Best Practices

AI development is a complex and iterative process that requires the application of best practices to ensure the creation of robust and effective AI systems. By following these best practices, AI engineers can build reliable, fair, and ethically sound AI technologies. Here are some key best practices for AI engineers to consider:

- Proper Data Governance: Implementing strong data governance practices ensures that the data used for training AI models is accurate, reliable, and free from bias. This includes data collection, storage, and management processes that prioritize data quality, privacy, and security.

- Model Explainability: As AI models become more complex, it is crucial to retain transparency and explainability in their decision-making processes. AI engineers should focus on designing models that can provide clear explanations of their outputs, enabling users and stakeholders to understand and trust the AI system’s decisions.

- Ethical Considerations: AI development should adhere to ethical guidelines and principles to ensure the responsible and ethical use of AI technologies. AI engineers should prioritize fairness, accountability, and transparency to mitigate potential biases and prevent inadvertent harmful consequences.

- Continuous Learning and Improvement: The field of AI is constantly evolving, and AI engineers must stay updated with the latest advancements, research, and industry trends. By fostering a culture of continuous learning and improvement, AI engineers can enhance their skills, explore new techniques, and deliver innovative AI solutions.

Adhering to best practices in AI development is essential for building reliable and effective AI systems. Proper data governance, model explainability, ethical considerations, and continuous learning contribute to the creation of trustworthy and ethical AI technologies.

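| Best Practices | Benefits |
|---|---|
| Proper Data Governance | Training data that is accurate, reliable, and less prone to bias; stronger data quality, privacy, and security |
| Model Explainability | Clear explanations of model outputs that help users and stakeholders understand and trust the system’s decisions |
| Ethical Considerations | Fairness, accountability, and transparency; fewer biases and inadvertent harmful consequences |
| Continuous Learning and Improvement | Up-to-date skills, exposure to new techniques, and more innovative AI solutions |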

Conclusion

In conclusion, to excel in the field of AI, AI engineers need to have extensive knowledge and proficiency in a range of tools and technologies. From machine learning algorithms to programming languages, staying up to date with the latest developments and advancements in AI tools and technologies is crucial.

By harnessing these tools and technologies, AI engineers can drive innovation and create impactful AI solutions that address real-world challenges. The use of machine learning algorithms, data preprocessing techniques, deep learning frameworks, and natural language processing libraries enables the development of cutting-edge AI models and applications.

Understanding model training methods, neural network architectures, and using the right programming languages for AI development is essential for building efficient and scalable AI systems. Additionally, leveraging development tools, hardware acceleration, and AI frameworks and libraries streamlines the AI development process, allowing AI engineers to iterate and experiment more effectively.

To achieve success in the field of AI, it is also important for AI engineers to follow AI development best practices, considering factors like proper data governance, model explainability, ethical considerations, and continuous learning and improvement. By adhering to these principles, AI engineers can ensure the reliability, fairness, and ethical use of AI technologies, ultimately making a positive impact in the AI landscape.